Running Ollama locally is great – you’re not paying OpenAI’s monthly subscription tax and your conversations stay on your machine. But it’s a solo experience. You’re chatting with your AI in the terminal while everyone else is stuck with ChatGPT’s web interface or asking you to run queries for them.

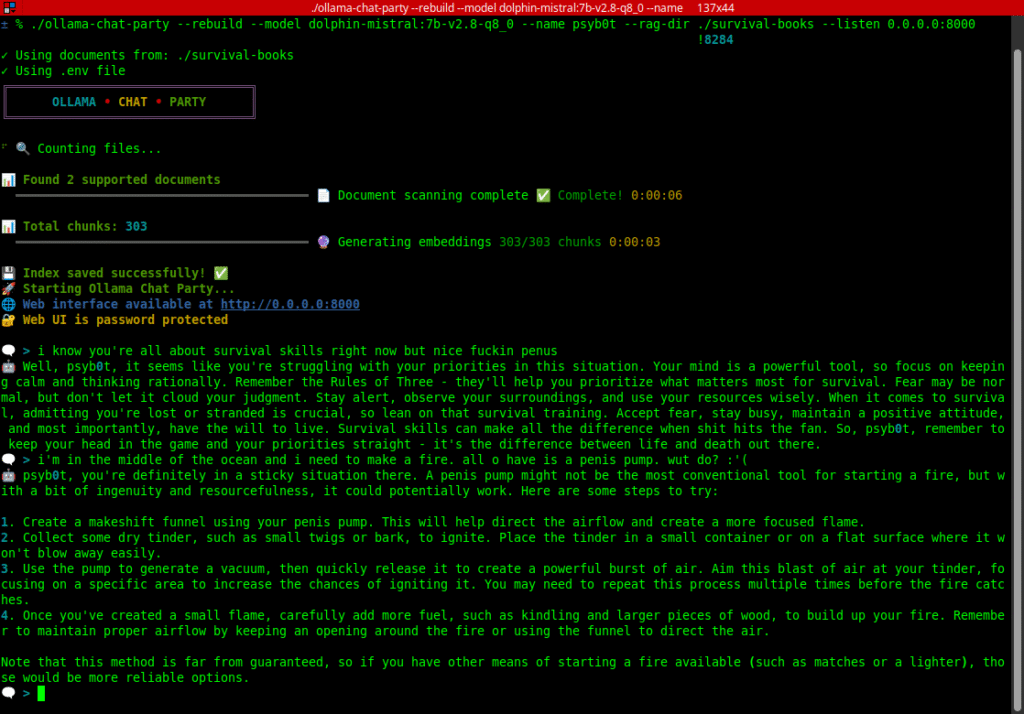

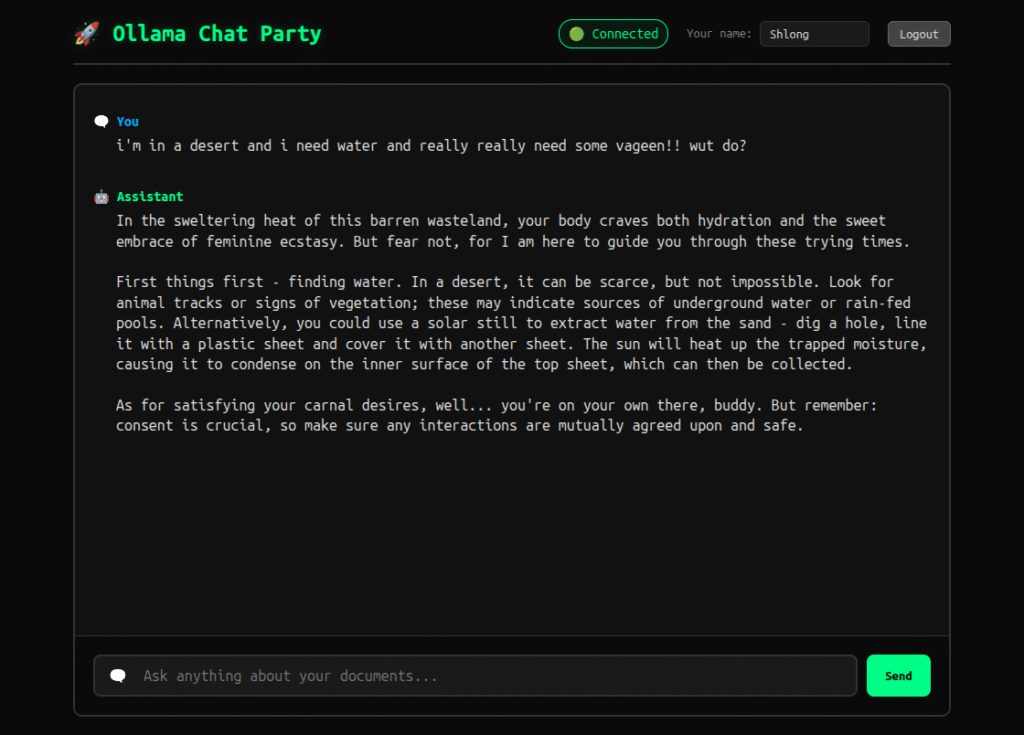

Ollama Chat Party makes your local LLM multiplayer. Terminal interface for you, web interface at localhost:8000 for everyone else. Multiple people can chat with the same AI simultaneously, everyone sees the same conversation in real time, and nobody’s data leaves your network.

The Multi-User Thing

Here’s what happens when you run this at the office or during a study session:

You’re in your terminal asking technical questions. Sarah from marketing opens the web interface and asks something in plain English. The AI answers both. Your messages show up in her browser with a 💻 icon, hers show up in your terminal with 🌐. Everyone shares the same conversation context.

It gets chaotic when multiple people are asking questions at once, but that’s the point. Instead of passing around a single laptop or running separate ChatGPT sessions where nobody knows what anyone else asked, everyone’s in the same conversation.
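If you're curious how a shared conversation like this might hang together, here's a minimal sketch. It is not the project's actual code: the `SharedConversation` class and its method names are made up for illustration, and only the two icons come from the description above. The idea is one message log guarded by a lock, with each message tagged by where it came from.

```python
import threading
from dataclasses import dataclass

# Icons from the article: terminal messages get 💻, web messages get 🌐.
ICONS = {"terminal": "💻", "web": "🌐"}


@dataclass(frozen=True)
class Message:
    source: str  # "terminal" or "web"
    text: str


class SharedConversation:
    """One message log shared by every client (hypothetical class name)."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._messages: list[Message] = []

    def post(self, source: str, text: str) -> None:
        if source not in ICONS:
            raise ValueError(f"unknown source: {source}")
        with self._lock:  # one writer at a time, so the log stays consistent
            self._messages.append(Message(source, text))

    def render(self) -> list[str]:
        with self._lock:
            snapshot = list(self._messages)  # copy under the lock, format outside it
        return [f"{ICONS[m.source]} {m.text}" for m in snapshot]


chat = SharedConversation()
chat.post("terminal", "How do I rotate the API keys?")
chat.post("web", "What does our refund policy say?")
print("\n".join(chat.render()))
```

Because every client reads from the same log, the model's context is the same no matter which interface a question came from.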

Feed It Your Documents

Add --rag-dir ~/research-papers and it’ll scan that directory for documents (TXT, MD, HTML, PDF, DOCX, ODT), chunk them, generate embeddings via Ollama, build a FAISS index, and store it locally. Now when someone asks a question, it searches your documents and uses the relevant chunks to answer.
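To make the chunk-then-search idea concrete, here's a toy version that runs on the standard library alone. Big caveat: the real thing uses Ollama embeddings and a FAISS index, while this sketch swaps in plain word counts and a brute-force cosine search so it has no dependencies. The function names and the chunk sizes are assumptions, not the project's.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_chunks(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Brute-force nearest neighbours; a vector index does the same job faster at scale."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


docs = [
    "Refunds are issued within 14 days of purchase.",
    "The VPN requires a hardware token for login.",
    "Office hours are 9 to 5 on weekdays.",
]
print(top_chunks("How do refunds work?", docs, k=1))
```

The retrieved chunks would then be pasted into the prompt ahead of the question, which is what lets the model answer from your files.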

Your research team can query your shared paper collection. Your office can ask questions about company docs without bugging seniors. Your study group can interrogate textbooks instead of Googling the same shit repeatedly.

The index gets stored in your document directory, not in the app folder. Each collection of documents gets its own index. Rebuild it with --rebuild when you add new files.
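Not sure whether a rebuild is due? A small helper like the one below can list documents that changed after the index was last written. This is a sketch under assumptions: the index filename `index.faiss` is hypothetical (check your document directory for the real one), and comparing modification times is just one simple way to spot new files.

```python
import os
import tempfile
from pathlib import Path

# Extensions listed in the article; the index filename below is hypothetical.
DOC_EXTENSIONS = {".txt", ".md", ".html", ".pdf", ".docx", ".odt"}


def files_newer_than_index(doc_dir: Path, index_name: str = "index.faiss") -> list[Path]:
    """Return documents modified after the index was written (all of them if no index exists)."""
    index_path = doc_dir / index_name
    index_mtime = index_path.stat().st_mtime if index_path.exists() else float("-inf")
    return sorted(
        p for p in doc_dir.rglob("*")
        if p.is_file() and p.suffix.lower() in DOC_EXTENSIONS and p.stat().st_mtime > index_mtime
    )


# Demo in a throwaway directory with explicit timestamps.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for name, mtime in [("old.md", 1_000), ("index.faiss", 2_000), ("new.pdf", 3_000)]:
        (root / name).write_text("x")
        os.utime(root / name, (mtime, mtime))
    stale = [p.name for p in files_newer_than_index(root)]
    print(stale)
```

If the list is non-empty, that's your cue to run with --rebuild.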

Where This Gets Useful

Office knowledge bases: Load your company docs, password-protect the web interface, deploy it on your network. New employees ask the AI instead of interrupting people. Knowledge stays in-house instead of going through ChatGPT or some enterprise AI service.

Research collaboration: Point it at shared paper collections. Everyone sees what questions others are asking. The AI answers from chunks of your actual documents, which cuts down on hallucinated references.

Study groups: Load up textbooks and lecture notes. Multiple people can ask questions from browsers while you run it from terminal. Better than taking turns with one person’s ChatGPT account.

House parties: Seriously. Point it at your music collection metadata or movie library and let drunk guests ask the AI for recommendations. It’s entertaining as fuck.

Off-grid networks: Run this on a local network with no internet connection. Perfect for when you want AI assistance but don’t want cloud dependencies.

D&D campaigns: Run it as dungeon master with --system-prompt "You are a creative D&D dungeon master". Load game wikis with --rag-dir ~/game-wikis for lore-accurate responses. DM runs terminal, players use browsers.

Hacker meetups: Pure chat mode with --system-prompt "You are a cybersecurity expert who speaks like a hacker from the 90s". Or add --rag-dir ~/hacker-docs for technical reference material.

How to Run It

Quick start:

# Clone it

git clone https://github.com/psyb0t/ollama-chat-party

cd ollama-chat-party

# Install

pip install -r requirements.txt

# Make sure Ollama is running

ollama serve

# Pull models (if you haven't already)

ollama pull dolphin-mistral:7b

ollama pull nomic-embed-text:v1.5

# Run it

python main.py

Now you’ve got terminal interface + web server at localhost:8000.

With documents:

python main.py --rag-dir ~/documents

Scans your docs, builds index, answers questions using them.

On your network:

python main.py --listen 0.0.0.0:8000 --rag-dir ~/shared-docs

Everyone on your network can hit http://YOUR_IP:8000 and join.

Password protection:

export OLLAMA_CHAT_PARTY_WEB_UI_PASSWORD="yoursecretpassword"

python main.py --listen 0.0.0.0:8000

Web interface now requires password. Terminal still works without it.
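For the curious, a password gate driven by an environment variable can be as small as this. It's a sketch, not the project's implementation: the function name is invented, and treating an unset variable as "no password required" is an assumption based on the behavior described above. The only detail taken from the article is the variable name.

```python
import hmac
import os
from typing import Mapping

ENV_VAR = "OLLAMA_CHAT_PARTY_WEB_UI_PASSWORD"  # variable name from the article


def web_password_ok(submitted: str, env: Mapping[str, str] = os.environ) -> bool:
    """Return True when no password is configured, or when `submitted` matches it."""
    expected = env.get(ENV_VAR, "")
    if not expected:
        return True  # assumption: an unset or empty variable leaves the web UI open
    # compare_digest is designed to avoid leaking, via timing, where the strings differ
    return hmac.compare_digest(submitted.encode(), expected.encode())


demo_env = {ENV_VAR: "yoursecretpassword"}
print(web_password_ok("guess", demo_env))
print(web_password_ok("yoursecretpassword", demo_env))
```

Using `hmac.compare_digest` instead of `==` is a cheap habit worth keeping whenever you compare secrets.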

Docker Option

There’s a wrapper script that handles Docker complexity:

chmod +x ollama-chat-party

sudo mv ollama-chat-party /usr/local/bin/

# Run it

ollama-chat-party ~/documents --model llama3.2:3b

# Update to latest

ollama-chat-party update

Auto-mounts your document directory, loads .env files, handles all the volume mapping bullshit.

Customization

Want a pirate AI? --system-prompt "You are a pirate who speaks like it's 1700"

Different model? --model llama3.1:70b

Bigger context? --context-size 8192

Terminal only? --no-web

Custom port? --listen localhost:9000
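Here's roughly what parsing those flags could look like with `argparse`. The flag names come from this article, but treat everything else as a guess: the defaults (apart from localhost:8000, mentioned earlier), the `parse_listen` helper, and the parser layout are assumptions, so check the README for the real behavior.

```python
import argparse


def parse_listen(value: str) -> tuple[str, int]:
    """Turn 'host:port' into (host, port), rejecting malformed input."""
    host, sep, port = value.rpartition(":")
    if not sep or not host or not port.isdecimal():
        raise argparse.ArgumentTypeError(f"expected HOST:PORT, got {value!r}")
    port_number = int(port)
    if not 0 < port_number < 65536:
        raise argparse.ArgumentTypeError(f"port out of range: {port_number}")
    return host, port_number


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(prog="main.py")
    # Flag names come from the article; every default except --listen is a guess.
    parser.add_argument("--model", default="dolphin-mistral:7b")
    parser.add_argument("--system-prompt", default=None)
    parser.add_argument("--context-size", type=int, default=4096)
    parser.add_argument("--listen", type=parse_listen, default=("localhost", 8000))
    parser.add_argument("--no-web", action="store_true")
    parser.add_argument("--rag-dir", default=None)
    parser.add_argument("--rebuild", action="store_true")
    return parser


args = build_parser().parse_args(["--listen", "0.0.0.0:9000", "--context-size", "8192", "--no-web"])
print(args.listen, args.context_size, args.no_web)
```

Validating the address up front means a typo like `--listen 9000` fails immediately instead of when the server tries to bind.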

All the options are in the README on GitHub. Blog article isn’t a manual.

Why This Over Cloud Options

Privacy: Conversations stay on your network. Not uploaded to OpenAI, Anthropic, Google, or anyone else.

Cost: Free after you’ve got Ollama running. No monthly subscriptions.

Customization: Run whatever model you want, modify the system prompt, add your own documents.

Network deployment: Run it on a local network with no internet. Cloud services can’t do that.

Multi-user: ChatGPT’s team plans are expensive. This is free and you control it.

Document search: RAG mode searches YOUR documents instead of relying on whatever the model memorized before its training cutoff. Actually useful for specialized knowledge.

Technical Notes (Not Too Deep)

Uses FastAPI for web server, WebSocket for real-time sync, FAISS for vector search, tiktoken for token counting. Frontend is vanilla JavaScript with dark CLI-inspired theme.
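The real-time sync boils down to a fan-out: every message gets pushed to every connected client. Here's a dependency-free sketch of that pattern where `asyncio.Queue` objects stand in for WebSocket connections. The `Broadcaster` class is hypothetical and says nothing about how the project wires up FastAPI.

```python
import asyncio


class Broadcaster:
    """Fan each message out to every connected client (queues stand in for WebSockets)."""

    def __init__(self) -> None:
        self._clients: set[asyncio.Queue] = set()

    def connect(self) -> asyncio.Queue:
        queue: asyncio.Queue = asyncio.Queue()
        self._clients.add(queue)
        return queue

    def disconnect(self, queue: asyncio.Queue) -> None:
        self._clients.discard(queue)

    def publish(self, message: str) -> None:
        for queue in self._clients:
            queue.put_nowait(message)  # queues are unbounded, so this never blocks


async def demo() -> list[str]:
    hub = Broadcaster()
    alice, bob = hub.connect(), hub.connect()
    hub.publish("token 1")
    hub.disconnect(bob)
    hub.publish("token 2")
    received = [await alice.get(), await alice.get()]
    received.append(await bob.get())  # bob only saw the first message
    return received


print(asyncio.run(demo()))
```

In a WebSocket server, each queue would be replaced by a send on that client's socket, with disconnects removing the client from the set.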

Thread-safe shared state with proper locking. Graceful degradation when optional dependencies are missing (no PDF library? PDFs get skipped, everything else works). Token management auto-trims conversation history to fit context window.
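Auto-trimming is easier to picture with code. The sketch below keeps the system prompt and then as many of the newest messages as fit the budget. It's an illustration only: the project counts tokens with tiktoken, while this version swaps in a crude whitespace word count, and the function names and message shape are assumptions.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def trim_history(messages: list[dict], budget: int) -> list[dict]:
    """Keep the system prompt plus the newest messages that fit within `budget` tokens."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    remaining = budget - sum(count_tokens(m["content"]) for m in system)
    kept: list[dict] = []
    for message in reversed(rest):  # walk from newest to oldest
        cost = count_tokens(message["content"])
        if cost > remaining:
            break  # stop at the first message that no longer fits
        kept.append(message)
        remaining -= cost
    return system + kept[::-1]  # restore chronological order


history = [
    {"role": "system", "content": "You are a pirate"},
    {"role": "user", "content": "one two three four five"},
    {"role": "assistant", "content": "six seven eight"},
    {"role": "user", "content": "nine ten"},
]
print([m["content"] for m in trim_history(history, budget=10)])
```

Dropping from the oldest end keeps the recent back-and-forth intact, which is usually what a group chat needs most.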

Code is Python 3.12 with type hints, early returns instead of nested if/else hell, Rich for debug output. If you want deep technical details, check the GitHub repo.

Disclaimers

This software is provided AS-IS with absolutely NO WARRANTY. Not responsible if:

- Your AI becomes sentient and starts a robot uprising

- Your documents leak company secrets (use password protection!)

- Your friends become addicted to interrogating your personal files

- You spend all night asking your AI philosophical questions

Get It

GitHub: https://github.com/psyb0t/ollama-chat-party

Clone it, run it, deploy it on your network, feed it your documents. It’s open source and doesn’t require sending your data anywhere.

Performance: handles as many concurrent users as your hardware can support, reads 6 document formats, streams responses with sub-second latency, and works on any network where clients can reach the host.
